ACTIVITY BASED PERSONALIZED ENVIRONMENTS

Team: Jingyang Liu and Pragya Gupta

Machine Learning | Computer Vision | Python | Fabrication | Electronics

This project developed a machine learning pipeline for activity recognition in a workplace setting using computer vision and scikit-learn. Environmental settings are personalized based on the recognized activity, and a touch interface lets the user override them.

The project attempts to further the idea of having personalized comfort conditions by utilizing activity recognition as a non-intrusive control system.

Input: Personal behavior or group activity with a shared goal

Output: Appliances, potentially scaled up to the whole building system

Activity recognition can be used in a larger context to compute the overall impact of multi-user activity and control building-level systems and appliances. In the future, these activity patterns can be used to optimize the energy management system and controls.

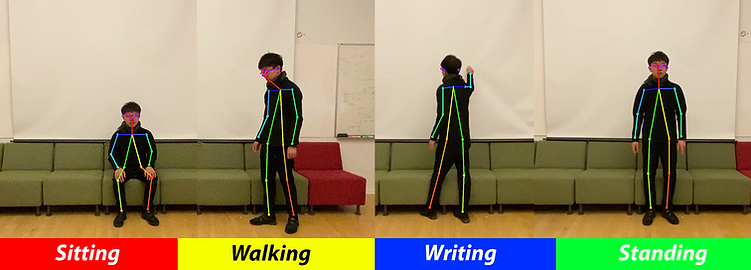

The algorithm was trained on videos ranging from 30 seconds to 2 minutes in length, recorded with a webcam at 640x480 resolution and a frame rate of 10 fps.

The OpenPose library was used to detect the positions of joints in each frame. It uses a convolutional neural network to provide the 2D positions of 18 joints, including the head, neck, arms, and legs. Position coordinates are formatted as x and y values proportional to the image size, so 36 values are obtained for each person. A window size of 5 frames was used.
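As a rough illustration of the 36-value layout, the sketch below flattens 18 keypoints given in pixels into x and y values scaled by the frame size. The function name `flatten_pose` and the pixel-space input format are assumptions made for this example; they are not OpenPose's own output API.

```python
FRAME_W, FRAME_H = 640, 480  # webcam resolution used for the training videos
NUM_JOINTS = 18              # joints reported per person


def flatten_pose(keypoints_px, width=FRAME_W, height=FRAME_H):
    """Turn 18 (x, y) pixel positions into 36 values proportional to image size.

    `keypoints_px` is a hypothetical input: a list of 18 (x, y) tuples in pixels.
    """
    if len(keypoints_px) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} joints, got {len(keypoints_px)}")
    values = []
    for x, y in keypoints_px:
        # Dividing by the frame size makes each coordinate resolution-independent.
        values.extend((x / width, y / height))
    return values
```

A joint at the centre of the frame, (320, 240), becomes the pair (0.5, 0.5).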

Because the activities classified in this project depend on the motion of the body, we kept the joint information for the neck, arms, and legs. In total, the positions of 14 joints were extracted for each person in the frame.
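The joint selection can be sketched as a simple index filter over the flat 36-value pose. The exact indices are not given here, so the list below is an assumption: it presumes a COCO-style ordering (index 0 nose, 1 neck, 2 to 7 arms, 8 to 13 legs, 14 to 17 eyes and ears) and keeps the first 14 entries to match the stated count.

```python
# Hypothetical index list: assumes a COCO-style ordering in which the last
# four joints (14-17) are the eyes and ears. Adjust to the real layout.
KEPT_JOINTS = list(range(14))


def select_joints(pose36, kept=KEPT_JOINTS):
    """Keep the (x, y) pairs of the chosen joints from a flat 36-value pose."""
    selected = []
    for j in kept:
        # Joint j occupies positions 2*j (x) and 2*j + 1 (y) in the flat list.
        selected.extend(pose36[2 * j : 2 * j + 2])
    return selected
```

With 14 joints kept, each person contributes 28 values per frame.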

If more than 5 joints were missing, the frame was tagged as invalid and replaced by the next closest frame. To keep the feature vector at a fixed size, any joint position with no value was replaced with the value detected for that joint in the previous frame.
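A minimal sketch of this cleaning step follows, assuming each frame is a list of 14 joints where a detected joint is an (x, y) tuple and a missing joint is `None`. The helper names, the `None` convention, and the tie-breaking rule (the earlier frame wins when two valid frames are equally close) are assumptions for illustration.

```python
MAX_MISSING = 5  # a frame with more missing joints than this is invalid


def is_valid(joints):
    """A frame is valid when at most MAX_MISSING joints are undetected."""
    return sum(1 for j in joints if j is None) <= MAX_MISSING


def fill_missing(joints, previous):
    """Replace each missing joint with the same joint from the previous frame."""
    return [prev if cur is None else cur for cur, prev in zip(joints, previous)]


def clean_sequence(frames):
    """Swap invalid frames for the closest valid one, then fill remaining gaps."""
    valid_idx = [i for i, f in enumerate(frames) if is_valid(f)]
    if not valid_idx:
        raise ValueError("no valid frame in the sequence")
    cleaned, previous = [], None
    for i in range(len(frames)):
        # Closest valid frame by index distance; a valid frame maps to itself.
        nearest = min(valid_idx, key=lambda k: abs(k - i))
        joints = frames[nearest]
        if previous is not None:
            joints = fill_missing(joints, previous)
        # Note: the first frame has no predecessor, so its gaps stay as None.
        cleaned.append(joints)
        previous = joints
    return cleaned
```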

The feature space was constructed using a combination of these joint positions.
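One plausible way to combine the joint positions, given the 5-frame window, is to concatenate the per-frame vectors of each window into a single sample. The text does not specify the combination, so plain concatenation and the function name `window_features` are assumptions.

```python
WINDOW = 5  # frames per window


def window_features(frames, window=WINDOW):
    """Concatenate each run of `window` consecutive frame vectors into one sample.

    `frames` is a list of equal-length per-frame vectors (for example 28 values
    for 14 joints). Consecutive samples overlap by `window - 1` frames.
    """
    samples = []
    for start in range(len(frames) - window + 1):
        sample = []
        for vec in frames[start : start + window]:
            sample.extend(vec)
        samples.append(sample)
    return samples
```

With 28 values per frame, each sample has 5 x 28 = 140 features, and a clip of N frames yields N - 4 samples.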

Three classifiers were tested for performance: an SVM (C = 10e3), a DNN (3 layers of 50 units each), and a Random Forest (100 trees, depth of 30).
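The three configurations map onto scikit-learn estimators roughly as sketched below. Only the listed hyperparameters come from the project; the default RBF kernel, `max_iter`, the random seed, the 70/30 split, and the synthetic placeholder data are assumptions added so the example runs on its own.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC


def build_classifiers(seed=0):
    """Return the three candidate models with the stated hyperparameters."""
    return {
        "svm": SVC(C=10e3),  # kernel left at scikit-learn's default (assumption)
        "dnn": MLPClassifier(hidden_layer_sizes=(50, 50, 50),
                             max_iter=500, random_state=seed),
        "random_forest": RandomForestClassifier(n_estimators=100, max_depth=30,
                                                random_state=seed),
    }


def compare(X, y, seed=0):
    """Fit each model on a train split and report held-out accuracy."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=seed)
    scores = {}
    for name, clf in build_classifiers(seed).items():
        clf.fit(X_train, y_train)
        scores[name] = clf.score(X_test, y_test)
    return scores


def placeholder_data(seed=0):
    """Synthetic stand-in for the real windowed joint features (not project data)."""
    return make_classification(n_samples=300, n_features=140, n_informative=20,
                               n_classes=3, random_state=seed)
```

Calling `compare(*placeholder_data())` returns a dictionary of accuracies keyed by model name; the numbers it produces say nothing about the project's actual results.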

Because all three algorithms achieved similar accuracy, the team selected the Random Forest classifier.

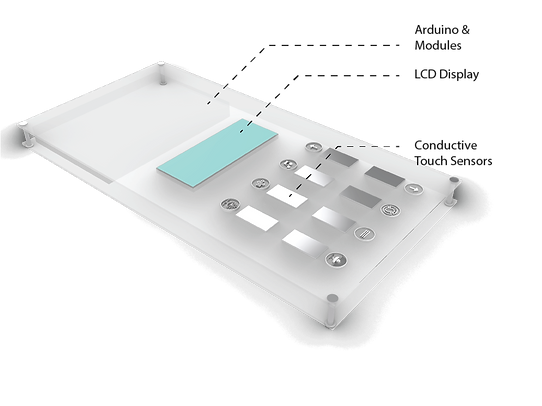

Hardware: Sensors and Appliances

The primary sensor is a common digital camera. Ideally, it is mounted so that the primary user space falls within its field of view.

The proposed kit consists of appliances and sensors. The appliances include a table fan, task lighting, and an audio system. The kit also reads the current environmental conditions (lighting level, humidity, and ambient dry-bulb temperature) and shows them on an LED display. A photoresistor and an ASAIR AM2302 (DHT22) module take these readings.

The easy-to-deploy kit also features a touch override. Stainless steel sheets serve as capacitive touch surfaces and are connected to a CAP1188 8-channel capacitive touch sensor breakout board, which relays the user’s touch to override system settings: varying the fan speed, dimming the lamp, and pausing, playing, and changing the audio. A DFPlayer with a micro-SD reader controls the audio system.
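The override behaviour can be sketched as activity-driven presets on which any touch-set value takes precedence. Everything named below is hypothetical: the activity labels, the preset values, the 0 to 3 fan scale, and the `Settings` and `Override` types are placeholders, not the kit's firmware.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Settings:
    fan_speed: int       # hypothetical scale: 0 (off) to 3
    lamp_level: int      # brightness in percent, 0 to 100
    audio_playing: bool


# Hypothetical presets keyed by recognized activity.
PRESETS = {
    "typing": Settings(fan_speed=1, lamp_level=80, audio_playing=True),
    "away": Settings(fan_speed=0, lamp_level=0, audio_playing=False),
}


@dataclass
class Override:
    """Values set by touch; None means the user has not overridden that field."""
    fan_speed: Optional[int] = None
    lamp_level: Optional[int] = None
    audio_playing: Optional[bool] = None


def resolve(activity, override):
    """Start from the activity preset, then apply any touch overrides on top."""
    base = PRESETS[activity]
    changes = {k: v for k, v in vars(override).items() if v is not None}
    return replace(base, **changes)
```

For example, `resolve("typing", Override(fan_speed=3))` keeps the lamp and audio from the preset but raises the fan to the user's chosen speed.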